Architecture and Components

OMniLeads is a multi-component application. Each component resides in its own GitLab repository, which stores the source code and / or configuration together with the build, deploy and CI / CD pipeline scripts.

Although the components of a running OMniLeads instance interact as a unit over TCP / IP connections, each one is an independent entity with its own GitLab repository and DevOps cycle.

At the build level, each component is distributed as RPMs (for installations on Linux) and as Docker images (for installations on Docker Engine). For deployment, each component has a script, first_boot_installer.tpl, which can be invoked as a provisioner to deploy the component on a Linux host in an automated way, or run manually after editing the variables in the script.

We can think of each component as a piece of a puzzle with its own attributes:

Description of each component

Each component is described below:

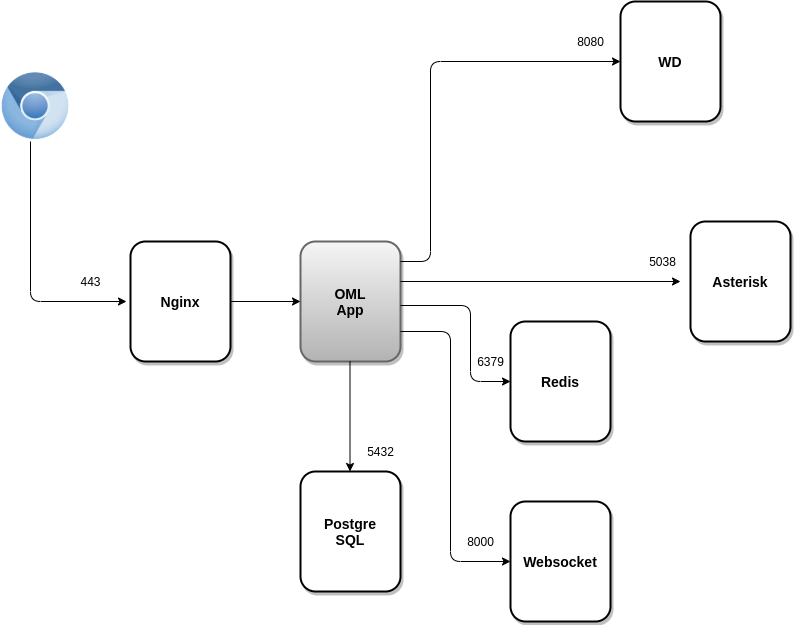

- OMLApp (https://gitlab.com/omnileads/ominicontacto): The web application (Python / Django) is contained in OMLApp.

Nginx is the web server that receives HTTPS requests and forwards them to OMLApp (Django / uWSGI). OMLApp interacts with various components to store and provision configuration, to generate calls, and to return the Agent / Campaign monitoring and report views.

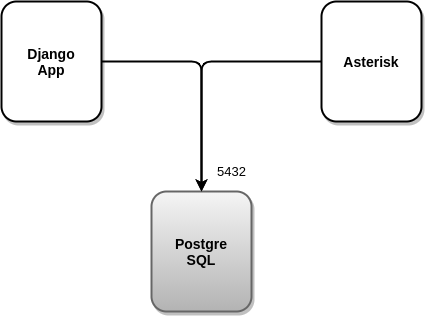

OMLApp uses PostgreSQL as its SQL engine, and Redis both as a cache and to provision the Asterisk configuration, either via .conf files or by generating key / value structures that Asterisk queries in real time when processing campaign calls. OMLApp connects to the Asterisk AMI interface to generate calls and to reload some configuration. It also connects to the WombatDialer API when it needs to generate campaigns with predictive dialing.

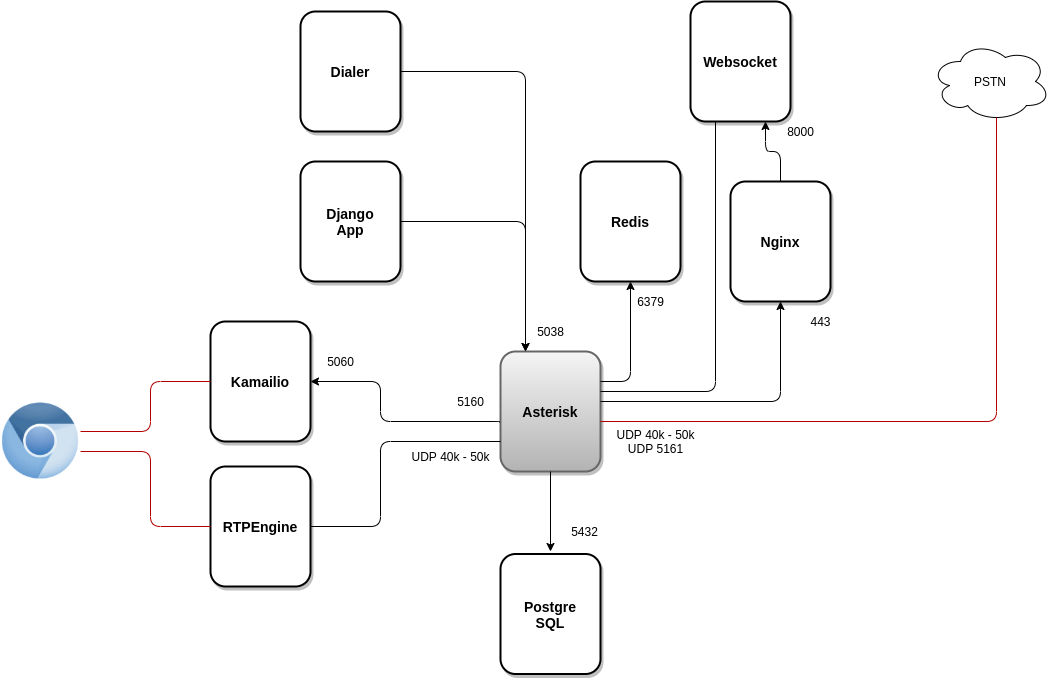

- Asterisk (https://gitlab.com/omnileads/omlacd): OMniLeads uses the Asterisk framework as the basis of its ACD (Automatic Call Distributor). It is responsible for implementing the business logic (telephone campaigns, recordings, reports and telephone channel metrics). At the networking level, Asterisk receives AMI requests from OMLApp and from WombatDialer. It also opens connections to PostgreSQL to write logs, to Redis to query the campaign parameters provisioned from OMLApp, and to Nginx to establish the websocket used to fetch the content of the Asterisk configuration files (/etc/asterisk) generated from OMLApp.

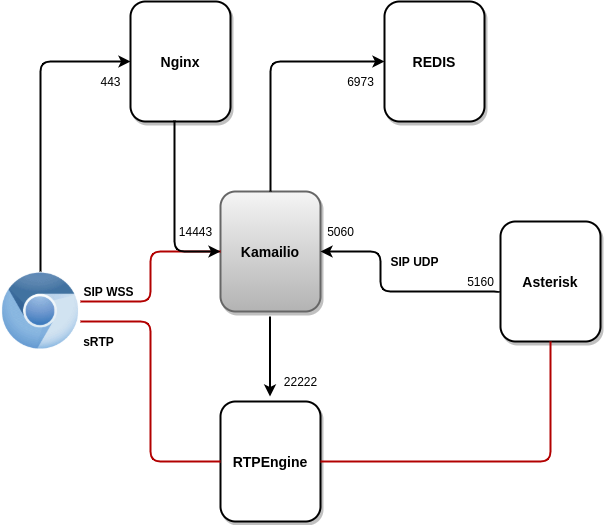

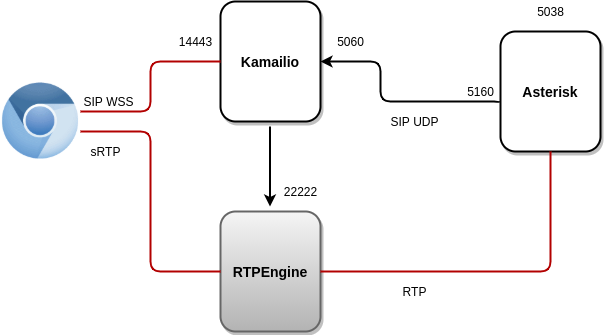

- Kamailio (https://gitlab.com/omnileads/omlkamailio): This component works together with RTPEngine (the WebRTC bridge) to manage WebRTC (SIP over WSS) communications with agents, while holding SIP over UDP sessions with Asterisk. Kamailio receives the REGISTERs generated by the agents' webphone (JsSIP), and therefore takes care of *registering and locating* users, using Redis to store the network address of each user.

For Asterisk, all agents are reachable at Kamailio's URI, so Kamailio receives INVITEs (UDP 5060) from Asterisk when it needs to locate an agent to connect a call. Finally, Kamailio opens connections to RTPEngine (TCP 22222) to request an SDP when establishing SIP sessions between Asterisk (VoIP) and the WebRTC agents.

- RTPEngine (https://gitlab.com/omnileads/omlrtpengine): OMniLeads relies on RTPEngine for transcoding and bridging audio between WebRTC and VoIP technology. The component maintains sRTP-WebRTC audio channels with agent users on one side, while establishing RTP-VoIP channels with Asterisk on the other. RTPEngine receives connections from Kamailio on port 22222.
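The registration and location role described for Kamailio can be illustrated with a minimal sketch. It is not Kamailio's implementation: a plain dictionary stands in for the Redis store, and the class name, method names, user name and contact address are all hypothetical.

```python
from typing import Dict, Optional


class LocationTable:
    """In-memory stand-in for the Redis-backed user location store (assumption)."""

    def __init__(self) -> None:
        self._contacts: Dict[str, str] = {}

    def register(self, user: str, contact: str) -> None:
        """On REGISTER: remember the network address of the user's webphone."""
        self._contacts[user] = contact

    def locate(self, user: str) -> Optional[str]:
        """On INVITE: return the stored contact, or None if the user is unknown."""
        return self._contacts.get(user)


table = LocationTable()
# Hypothetical agent and contact address, for illustration only.
table.register("agent1001", "wss://203.0.113.10:51234")
```

With this table, an INVITE arriving from Asterisk for `agent1001` would be resolved through `table.locate("agent1001")`, while an unregistered agent yields `None`.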

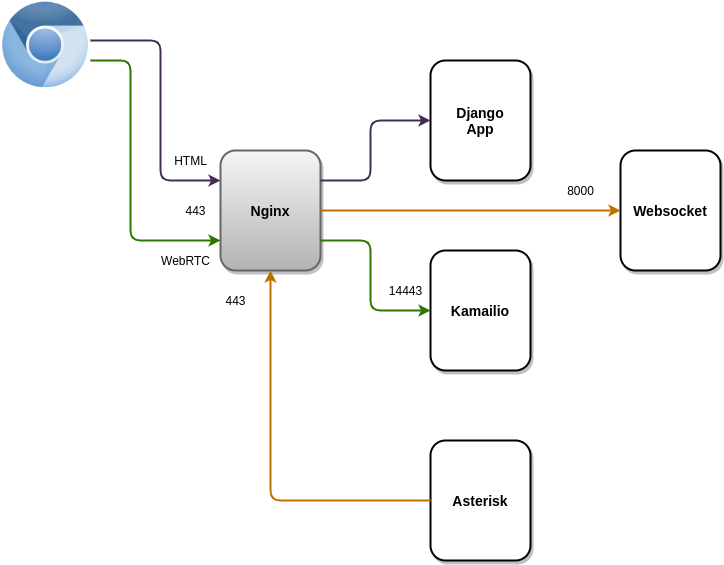

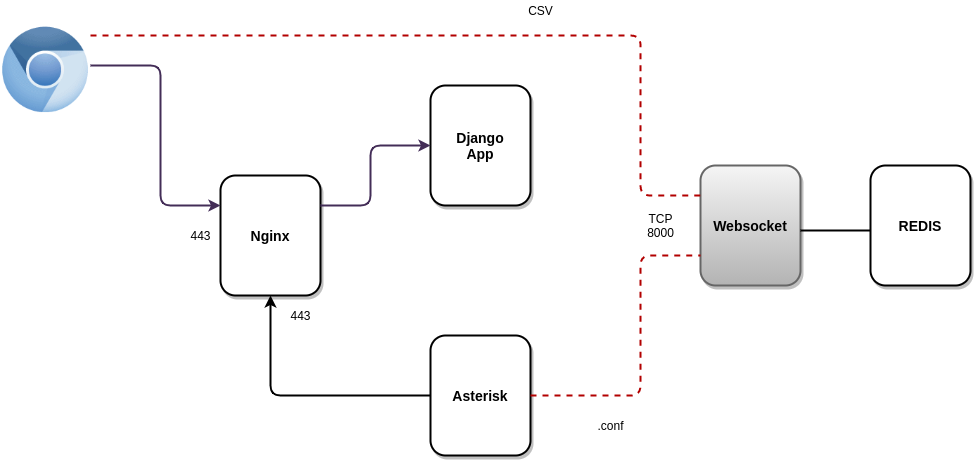

- Nginx (https://gitlab.com/omnileads/omlnginx): Nginx is the project's web server. Its task is to receive requests on TCP 443 from users, as well as from some components such as Asterisk. On the one hand, Nginx is invoked every time a user accesses the URL of the deployed environment. If a user request is meant to render a view of the Django web application, Nginx forwards it to uWSGI; if it is the REGISTER of the user's JsSIP webphone, Nginx forwards it to Kamailio (to set up a SIP websocket). On the other hand, Nginx is invoked by Asterisk when it sets up a websocket with the OMniLeads Python websocket server, which provisions the configuration generated by OMLApp.

- Python websocket (https://gitlab.com/omnileads/omnileads-websockets): OMniLeads relies on a Python-based websocket server, used to leave background tasks running (reports and generation of CSVs) and to receive an asynchronous notification when a task has completed, which improves the performance of the application. It is also used as a bridge between OMLApp and Asterisk for provisioning the configuration of the .conf files (/etc/asterisk).

When Asterisk starts, a process connects to this component over a websocket and from then on receives a notification every time configuration changes are provisioned. In its default configuration, the server listens on TCP port 8000, and the connections it receives are always forwarded by Nginx.
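The way Nginx dispatches the requests it receives on TCP 443 can be sketched as a small routing function. This is only an illustration of the three destinations named in the text (uWSGI, Kamailio and the Python websocket server). The path prefixes are assumptions made for the example, and the actual Nginx configuration of OMniLeads may match requests differently.

```python
# Hypothetical path prefixes, chosen only for illustration.
SIP_WEBSOCKET_PREFIX = "/ws-sip"        # assumption: webphone SIP websocket
CONFIG_WEBSOCKET_PREFIX = "/ws-config"  # assumption: Asterisk config websocket


def pick_upstream(path: str) -> str:
    """Return the component that should handle a request received on TCP 443."""
    if path.startswith(SIP_WEBSOCKET_PREFIX):
        # REGISTER traffic from the agent's webphone goes to Kamailio.
        return "kamailio"
    if path.startswith(CONFIG_WEBSOCKET_PREFIX):
        # The websocket server listens on TCP 8000 by default.
        return "python-websocket:8000"
    # Everything else is a view of the Django application.
    return "uwsgi"
```

For example, `pick_upstream("/ws-sip")` selects Kamailio, while any ordinary page request falls through to uWSGI.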

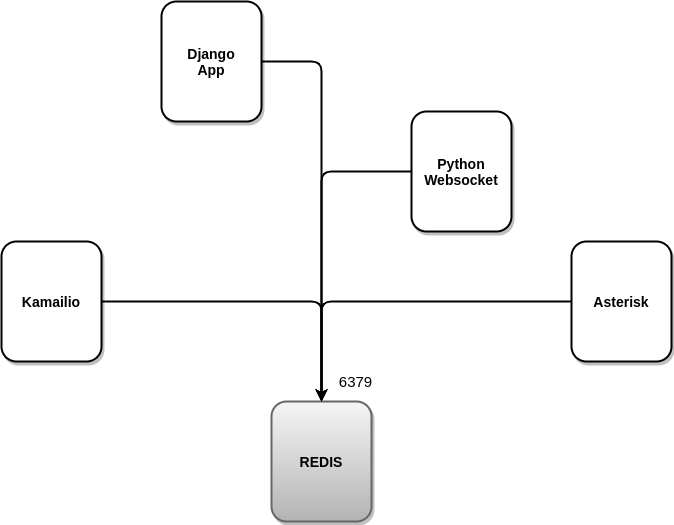

- Redis (https://gitlab.com/omnileads/omlredis): Redis is used for three specific purposes. First, as a cache to store the results of recurring queries involved in the supervision views of campaigns and agents; second, as the DB for the presence and location of users; and finally, to store the *Asterisk* configuration (/etc/asterisk/) as well as the configuration parameters involved in each module (campaigns, trunks, routes, IVR, etc.), replacing the native *Asterisk* alternative (*AstDB*).

- PostgreSQL (https://gitlab.com/omnileads/omlpgsql): PostgreSQL is the SQL database engine used by OMniLeads. All the reports and metrics of the system are generated from it. It also stores all the configuration information that must persist over time. It receives connections on TCP port 5432 from OMLApp (read / write) and from Asterisk (writing logs).

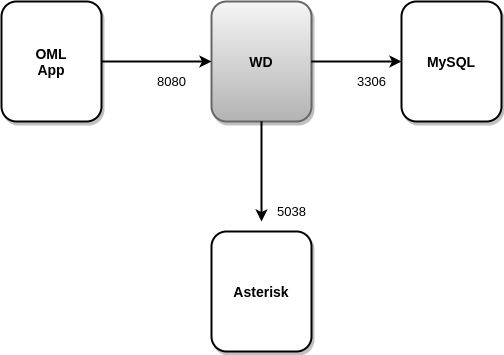

- WombatDialer (https://docs.loway.ch/WombatDialer/index.html): To work with predictive dialing campaigns, OMniLeads relies on third-party software called WombatDialer. This dialer exposes an API on TCP 8080, to which OMLApp connects to provision campaigns and contacts, while WombatDialer uses the AMI interface of the Asterisk component to generate automatic outbound calls and to check the status of the agents in each campaign. This component uses its own MySQL engine to operate.
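The connections described for each component can be collected into a small table, which is useful when a cluster deployment needs firewall rules. The sketch below lists only the flows whose port is stated explicitly in this section; the AMI and Redis flows are left out because their ports are not given here. The `Flow` type and the `inbound` helper are hypothetical names introduced for the example.

```python
from typing import List, NamedTuple, Tuple


class Flow(NamedTuple):
    source: str
    destination: str
    protocol: str
    port: int


# Only flows whose port is stated in the component descriptions.
FLOWS = [
    Flow("users", "Nginx", "TCP", 443),
    Flow("Asterisk", "Nginx", "TCP", 443),
    Flow("Nginx", "Python websocket", "TCP", 8000),
    Flow("Asterisk", "Kamailio", "UDP", 5060),
    Flow("Kamailio", "RTPEngine", "TCP", 22222),
    Flow("OMLApp", "PostgreSQL", "TCP", 5432),
    Flow("Asterisk", "PostgreSQL", "TCP", 5432),
    Flow("OMLApp", "WombatDialer", "TCP", 8080),
]


def inbound(component: str) -> List[Tuple[str, int]]:
    """Return the distinct (protocol, port) pairs a component must accept."""
    return sorted({(f.protocol, f.port) for f in FLOWS if f.destination == component})
```

Calling `inbound("PostgreSQL")` collapses the two PostgreSQL flows into a single TCP 5432 entry, which is the rule a host dedicated to that component would need to allow.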

Deploy and environment variables

Having covered the function of each component and its interactions in terms of networking, we move on to the *deploy* process.

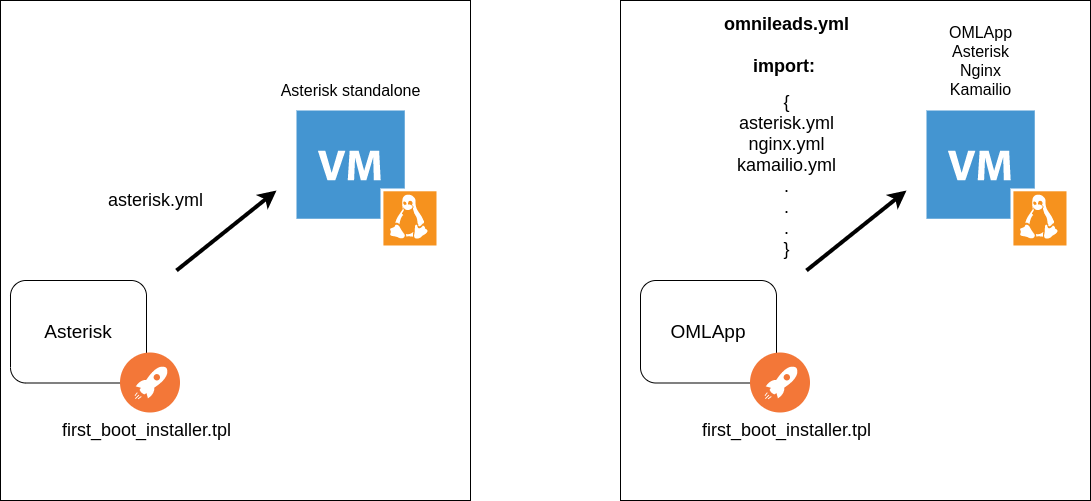

Each component has a bash script and an Ansible playbook that allow the component to be deployed, either on a dedicated Linux host or alongside other components on the same host.

This is possible because the Ansible playbook can be used in two ways. It can be invoked from the bash script first_boot_installer.tpl, when that script acts as the provisioner of a Linux host dedicated to the component within a cluster. It can also be imported by the Ansible playbook of the OMLApp component when several components are deployed on the same host where OMLApp runs.

Therefore, each component can either exist on a standalone host or coexist with OMLApp on the same host. The installation method covers both possibilities.

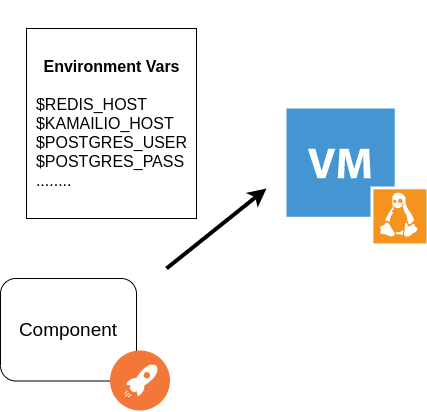

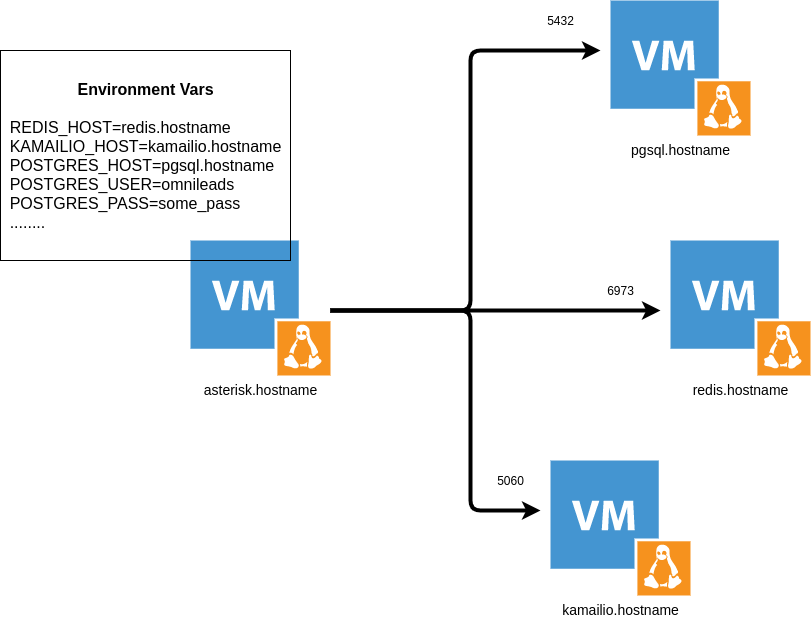

This installation method is based entirely on environment variables that are generated at deploy time and whose purpose, among other things, is to hold the network address and port of each component needed for the interaction. In other words, every configuration file of every OMniLeads component finds its peers by reading OS environment variables. For example, the Asterisk component points its AGIs at the environment variables $REDIS_HOST and $REDIS_PORT when opening a connection to Redis.

Thanks to environment variables, the bare-metal and Docker container approaches are compatible: OMniLeads can be deployed by installing all components on one host, by distributing them across several hosts, or directly on Docker containers.

Configuration parameters are provisioned via environment variables, and the application can always be deployed with the data that must persist (call recordings and the PostgreSQL DB) kept on resources mounted on the Linux file system that hosts each component. This makes it possible to work with immutable infrastructure if we wish. Each component can easily be destroyed and recreated without losing important data when resizing it or applying updates. We can simply discard the host running one version and deploy a new one with the latest update.

This opens up the approach proposed by the infrastructure-as-code or immutable-infrastructure paradigm, as seen from the perspective of the new IT generations that operate within the DevOps culture. The approach is optional, since updates can also be handled in the more traditional way, without destroying the instance that hosts the component.
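A minimal sketch of how a component could locate its peer through environment variables follows. It assumes the variables are named REDIS_HOST and REDIS_PORT; the fallback values (localhost and 6379, the standard Redis port) and the function name are assumptions for the example, not something OMniLeads is known to define.

```python
import os
from typing import Mapping, Tuple


def redis_address(env: Mapping[str, str] = os.environ) -> Tuple[str, int]:
    """Build the Redis (host, port) pair from environment variables."""
    # Fallback values are assumptions for this sketch.
    host = env.get("REDIS_HOST", "localhost")
    port = int(env.get("REDIS_PORT", "6379"))
    return host, port
```

Because the address is resolved when the function is called, the same code works unchanged whether Redis shares the host, runs on another host of the cluster, or runs in a container. Only the environment differs.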

The potential of turning to cloud-init as a provisioner

Cloud-init is a software package that automates the initialization of cloud instances during system boot. It can be configured to perform a variety of tasks. Some examples of the tasks cloud-init can perform are:

- Configure a hostname.

- Install packages on an instance.

- Run provisioning scripts.

- Suppress the default behavior of the virtual machine.

As of OMniLeads 1.16, each component contains a script called first_boot_installer.tpl. This script can be invoked at the cloud-init level, so that a fresh install of the component is performed on the first boot of the operating system.

As we have mentioned, it is possible to invoke the script at cloud VM creation time:

Another option is to render it as a Terraform template, to be launched as the provisioning step of each instance created from Terraform.

Regardless of the component in question, the purpose of first_boot_installer.tpl is to:
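The idea of rendering the script from a template before handing it to the instance can be illustrated in Python with `string.Template`, which substitutes `${name}` placeholders. This is a stand-in for the rendering step, not Terraform itself. The template excerpt and the variable names `redis_host` and `redis_port` are hypothetical and do not come from the real first_boot_installer.tpl.

```python
from string import Template
from typing import Mapping

# Hypothetical excerpt of a provisioning script template (assumption).
SCRIPT_TEMPLATE = """#!/bin/bash
export REDIS_HOST=${redis_host}
export REDIS_PORT=${redis_port}
# component installation steps would follow here
"""


def render_user_data(template_text: str, variables: Mapping[str, str]) -> str:
    """Fill in the placeholders; a missing variable raises KeyError."""
    return Template(template_text).substitute(variables)


user_data = render_user_data(
    SCRIPT_TEMPLATE, {"redis_host": "10.0.0.5", "redis_port": "6379"}
)
```

The rendered text starts with `#!`, which is how cloud-init recognizes user data that should be executed as a script on first boot. Using `substitute` rather than `safe_substitute` is a deliberate choice here, so that a forgotten variable fails at render time instead of producing a broken script on the instance.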

- Install some packages.

- Adjust some other configurations of the virtual machine.

- Determine network parameters of the new Linux instance.

- Run the Ansible playbook that installs the component on the operating system.

The first three steps are skipped when the component is installed from OMLApp and therefore shares its host.
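The step selection described above can be sketched as follows. The step labels paraphrase the list in this section, and the function and parameter names are hypothetical. The real script is a bash template, so this only models its decision logic.

```python
from typing import List

# Steps in the order listed for first_boot_installer.tpl.
STEPS = [
    "install packages",
    "adjust virtual machine configuration",
    "determine network parameters",
    "run Ansible playbook",
]


def planned_steps(shares_host_with_omlapp: bool) -> List[str]:
    """Return the steps to run for a standalone or a shared-host install."""
    if shares_host_with_omlapp:
        # Host preparation was already done for OMLApp: only the playbook runs.
        return STEPS[3:]
    return list(STEPS)
```

A standalone host therefore runs all four steps, while a component installed from OMLApp runs only the Ansible playbook.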